Using a Text Query to Search for Images in a Database

Searching an image database with a text query involves converting the query into a vector in the same feature space as the image vectors, then comparing those vectors to find the best matches. The steps below walk through an example:

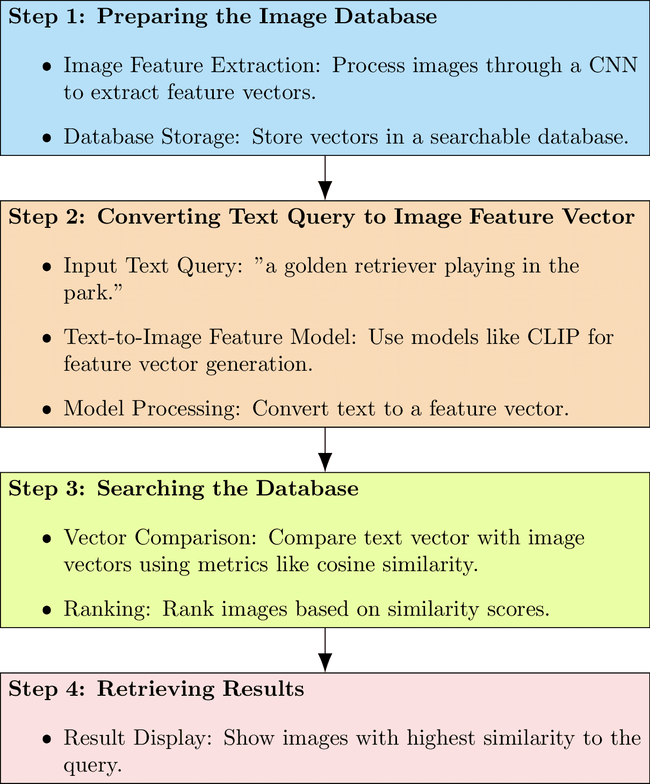

Step 1: Preparing the Image Database

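- Image Feature Extraction: Each image in the database is passed through an image encoder (a CNN or a vision transformer), which outputs a feature vector capturing the image's visual content. For text search to work, this must be the image encoder of the same cross-modal model that embeds the query in Step 2; vectors from an unrelated CNN are not comparable with text vectors.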

- Database Storage: The feature vectors are stored in a database, forming the searchable image dataset.
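As a rough illustration of Step 1, the sketch below builds a small in-memory index. It assumes the encoder is passed in as a callable; `encode_image`, `build_index`, and `l2_normalize` are hypothetical names chosen for this example, and in practice `encode_image` would wrap the image encoder of a cross-modal model. A production system would more likely keep the vectors in a vector database than in a dictionary.

```python
import math
from typing import Callable, Dict, Iterable, List

Vector = List[float]


def l2_normalize(vec: Vector) -> Vector:
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def build_index(
    image_ids: Iterable[str],
    encode_image: Callable[[str], Vector],  # hypothetical: image id or path -> embedding
) -> Dict[str, Vector]:
    """Extract one feature vector per image and store it under the image id."""
    index: Dict[str, Vector] = {}
    for image_id in image_ids:
        index[image_id] = l2_normalize(encode_image(image_id))
    return index
```

Normalizing at indexing time is a design choice, not a requirement: it means the later comparison step only needs a dot product per image.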

Step 2: Converting the Text Query to a Feature Vector

- Input Text Query: Suppose your text query is "a golden retriever playing in the park."

- Text-to-Image Feature Model: To compare this text with images, you need a model trained on both modalities that can place a text description in the image feature space. One option is a cross-modal model such as CLIP (Contrastive Language-Image Pre-training) by OpenAI, which is trained on image-text pairs so that its text encoder and image encoder map matching descriptions and images to nearby points in a shared feature space.

- Model Processing: The model processes the text query and outputs a feature vector that represents the textual description in the same feature space as the image vectors.
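One way to try Step 2 is through the sentence-transformers library, which publishes a CLIP checkpoint under the name `clip-ViT-B-32`. The sketch below assumes that library is installed and that the checkpoint can be downloaded on first use; `load_clip_model` and `embed_text` are hypothetical helper names for this example, not part of any library.

```python
from typing import List


def load_clip_model(model_name: str = "clip-ViT-B-32"):
    """Load a CLIP checkpoint via sentence-transformers (downloads on first use)."""
    # Imported lazily so embed_text can be exercised without the dependency.
    from sentence_transformers import SentenceTransformer

    return SentenceTransformer(model_name)


def embed_text(model, query: str) -> List[float]:
    """Encode one text query into the shared text-image feature space."""
    # encode() takes a list of strings and returns one vector per string.
    vectors = model.encode([query])
    return [float(x) for x in vectors[0]]
```

Usage would look like `embed_text(load_clip_model(), "a golden retriever playing in the park")`. The same model object also accepts PIL images in `encode()`, which is what keeps the image vectors and the query vector in one feature space.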

Step 3: Searching the Database

- Vector Comparison: The feature vector from the text query is compared to the feature vector of each image in the database. The comparison typically uses a similarity metric such as cosine similarity, which is the dot product of the two vectors divided by the product of their lengths.

- Ranking: The images are ranked based on the similarity scores, with higher scores indicating a closer match to the text query.
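The comparison and ranking in Step 3 can be sketched in plain Python. This is a brute-force version that scores every image, which is fine for small collections; larger ones usually rely on an approximate nearest-neighbor index instead. The function names and the `index` dictionary layout (image id mapped to vector) are assumptions made for this example.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of a and b divided by the product of their lengths."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def rank_images(
    query_vec: Sequence[float],
    index: Dict[str, Sequence[float]],
) -> List[Tuple[str, float]]:
    """Score every image against the query and sort by descending similarity."""
    scored = [
        (image_id, cosine_similarity(query_vec, vec))
        for image_id, vec in index.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```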

Step 4: Retrieving Results

- Result Display: The top-ranked images, those with feature vectors most similar to the text query vector, are retrieved and displayed as the search results.
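Selecting the results to display can be as simple as keeping the k highest scores. The sketch below assumes similarity scores have already been computed and are held in a dictionary keyed by image id; `top_k` and its `min_score` cutoff are hypothetical additions for illustration, since the steps above do not specify a threshold.

```python
import heapq
from typing import Dict, List, Tuple


def top_k(
    scores: Dict[str, float],
    k: int = 5,
    min_score: float = -1.0,  # assumed cutoff; -1.0 keeps every cosine score
) -> List[Tuple[str, float]]:
    """Return up to k (image_id, score) pairs, best match first."""
    candidates = [(image_id, s) for image_id, s in scores.items() if s >= min_score]
    # nlargest avoids sorting the whole collection when k is small.
    return heapq.nlargest(k, candidates, key=lambda pair: pair[1])
```

A cutoff like `min_score` can stop weak matches from filling the results when few images are relevant, though a sensible value depends on the model and the data.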

Example

- With the query "a golden retriever playing in the park," the model's text encoder generates a vector that represents this concept in the shared feature space.

- The system then finds images whose vectors are closest to this query vector, likely resulting in images of golden retrievers, parks, and scenes of play.

Conclusion

Using a text query to search a database of image feature vectors involves converting the text to a compatible feature vector and then performing similarity-based retrieval. This works with models trained for cross-modal (text and image) understanding and representation. The key requirement is that the text encoder and the image encoder map into the same feature space, so that a description and the images it describes end up close together.
